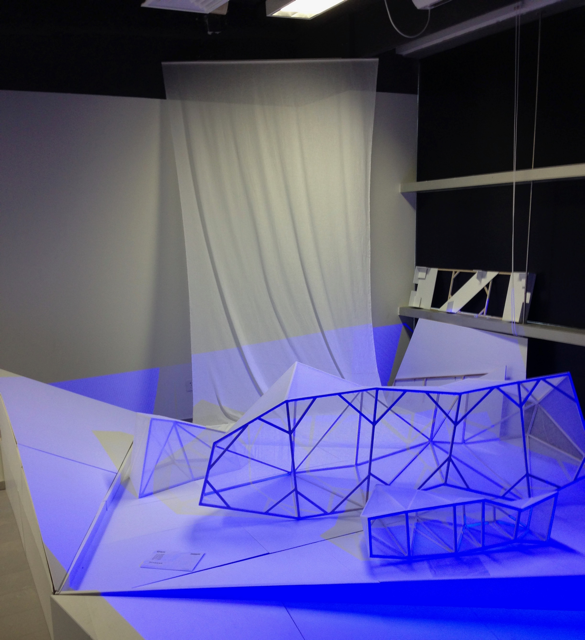

ZEN: AN INTERACTIVE INSTALLATION

WHAT IS 'ZEN'?

Zen (Chinese: 禅; pinyin: Chán) is a school of Mahayana Buddhism that originated in China during the Tang dynasty as Chan Buddhism.

Landscape painting, a traditional Chinese art form, expresses the spirit of Zen from many perspectives. We also chose water as another core element of the installation.